Okay, okay… I guess the only feeling up you’re gonna get is if you use a nipple to control the multi-touch, but I digress. Apple today filed an interesting patent application titled "Multi-touch Data Fusion," which combines its popular touch-screen interface with voice commands, visual input through a camera, motion sensors or even force sensors for a more interactive and intuitive user experience.

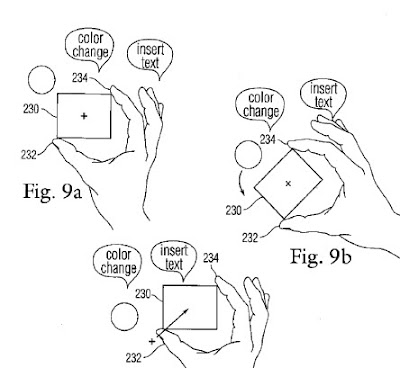

The combination of multi-touch and voice recognition could dramatically speed up workflow. You could select some text or an image and issue a voice command at the same time, instead of selecting first and then hunting for the required tool. Functions like changing fonts and colors, resizing, rotating or simply copying and pasting on the iPhone could be made much easier.

A combination of multi-touch and accelerometer data could give iPhone gamers greater control over the motion of characters. Force sensors could be used with multi-touch to apply a deformation effect to an image or to play a virtual instrument, with the strength of the effect determined by the level of force applied.

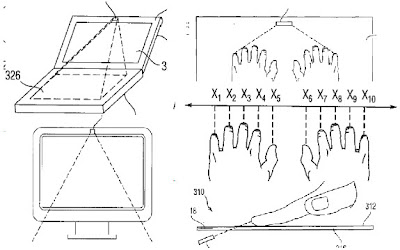

The most impressive combination (but also the most unlikely) would be a fusion of multi-touch and visual input. This method would use a camera to track hand movements and pick up gestures that can be converted into commands. This opens up a lot of possibilities, like Gaze Interaction or complex hand gesturing that may become a kind of geek sign language. Only time will tell. Air typing, anyone?

Sources:

USPTO Patent App and UnwiredView

Via: Gizmodo